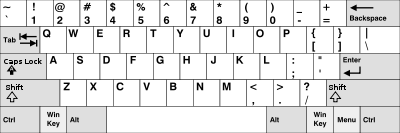

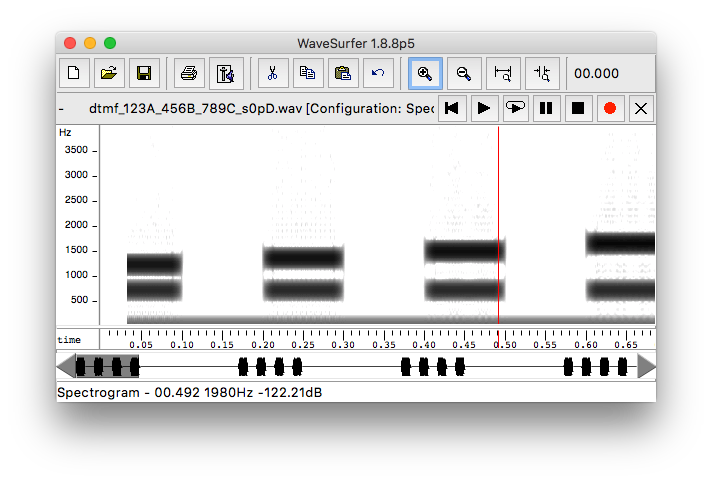

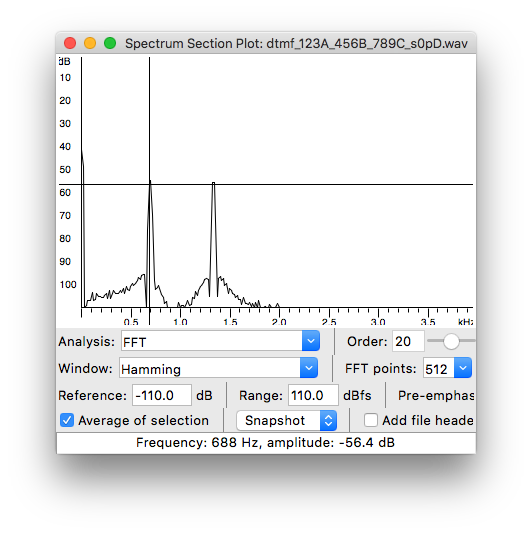

layout: true

<footer>
<span class="icon github">
<svg version="1.1" class="github-icon-svg" xmlns="http://www.w3.org/2000/svg" xmlns:xlink="http://www.w3.org/1999/xlink" x="0px" y="0px" viewBox="0 0 16 16" enable-background="new 0 0 16 16" xml:space="preserve">
<path fill-rule="evenodd" clip-rule="evenodd" fill="#C2C2C2" d="M7.999,0.431c-4.285,0-7.76,3.474-7.76,7.761c0,3.428,2.223,6.337,5.307,7.363c0.388,0.071,0.53-0.168,0.53-0.374c0-0.184-0.007-0.672-0.01-1.32c-2.159,0.469-2.614-1.04-2.614-1.04c-0.353-0.896-0.862-1.135-0.862-1.135c-0.705-0.481,0.053-0.472,0.053-0.472c0.779,0.055,1.189,0.8,1.189,0.8c0.692,1.186,1.816,0.843,2.258,0.645c0.071-0.502,0.271-0.843,0.493-1.037C4.86,11.425,3.049,10.76,3.049,7.786c0-0.847,0.302-1.54,0.799-2.082C3.768,5.507,3.501,4.718,3.924,3.65c0,0,0.652-0.209,2.134,0.796C6.677,4.273,7.34,4.187,8,4.184c0.659,0.003,1.323,0.089,1.943,0.261c1.482-1.004,2.132-0.796,2.132-0.796c0.423,1.068,0.157,1.857,0.077,2.054c0.497,0.542,0.798,1.235,0.798,2.082c0,2.981-1.814,3.637-3.543,3.829c0.279,0.24,0.527,0.713,0.527,1.437c0,1.037-0.01,1.874-0.01,2.129c0,0.208,0.14,0.449,0.534,0.373c3.081-1.028,5.302-3.935,5.302-7.362C15.76,3.906,12.285,0.431,7.999,0.431z"/>
</svg>
</span>
<a href="https://github.com/sikoried"><span class="username">sikoried</span></a>
</footer>

---

# Sequence Learning

## Cost and States

Korbinian Riedhammer

---

# Today's Menu

### Better auto-correct using custom cost functions

### From edit distance to DTW with feature data

### From pair-wise DTW to state-based decoding

---

# Better Auto-Correct

[source: wiki](https://commons.wikimedia.org/wiki/File:KB_United_States.svg#/media/File:KB_United_States.svg)

- Compute cost of substitution by proximity of keys
- Map keys to grid, compute euclidean distance
- What about for example `g <> h`, or `a <> l`?

Outlook: [Damerau-Levenshtein](https://en.wikipedia.org/wiki/Damerau%E2%80%93Levenshtein_distance#Distance_with_adjacent_transpositions) with adjacent transpositions.
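---

# Better Auto-Correct

A minimal sketch of the key-proximity idea, not a reference implementation. The grid coordinates, the row stagger values, the scaling of costs into [0, 1], and the names `KEY_POS`, `sub_cost` and `edit_distance` are all assumptions made for illustration. Insertions and deletions keep a uniform cost of 1.

```python
import math

# Assumed US QWERTY letter rows; the per-row offsets (in key widths) are a
# hypothetical approximation of the physical stagger.
ROWS = [("qwertyuiop", 0.0), ("asdfghjkl", 0.25), ("zxcvbnm", 0.75)]

# Map each key to (x, y) grid coordinates.
KEY_POS = {
    ch: (col + offset, float(row))
    for row, (keys, offset) in enumerate(ROWS)
    for col, ch in enumerate(keys)
}

# Largest distance between any two keys, used to scale costs into [0, 1].
MAX_DIST = max(
    math.dist(a, b) for a in KEY_POS.values() for b in KEY_POS.values()
)


def sub_cost(a, b):
    """Substitution cost: scaled euclidean distance between two keys."""
    if a == b:
        return 0.0
    if a not in KEY_POS or b not in KEY_POS:
        return 1.0  # unknown characters fall back to the uniform cost
    return math.dist(KEY_POS[a], KEY_POS[b]) / MAX_DIST


def edit_distance(source, target, ins_del_cost=1.0):
    """Levenshtein distance with keyboard-aware substitution cost."""
    n, m = len(source), len(target)
    d = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        d[i][0] = i * ins_del_cost
    for j in range(1, m + 1):
        d[0][j] = j * ins_del_cost
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d[i][j] = min(
                d[i - 1][j] + ins_del_cost,  # deletion
                d[i][j - 1] + ins_del_cost,  # insertion
                d[i - 1][j - 1] + sub_cost(source[i - 1], target[j - 1]),
            )
    return d[n][m]
```

With this cost, `sub_cost("g", "h")` is much smaller than `sub_cost("a", "l")`, so `gello` ranks closer to `hello` than `aello` does.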
---

# DTW with Feature Data

Edit distance between two waveforms?

.left[
<img src="/sequence-learning/02-cost-and-states/wvf1.png" style="width:45%">

reference
]

.right[
test utterance

<img src="/sequence-learning/02-cost-and-states/wvf2.png" style="width:45%">
]

---

# DTW with Feature Data

- Raw sample data is way too numerous
- Compute spectral (cepstral) features, e.g. [MFCC](https://en.wikipedia.org/wiki/Mel-frequency_cepstrum)
- Use euclidean distance on feature vectors
- Uniform cost for substitution/deletion/insertion

## Isolated Word Recognition

- Have prototype recordings for each word
- Compute "distance" between test recording and prototype words
- Choose the word with the least distance

---

# Decoding States with DP

- Don't compare to _individual prototype recordings_ of each class
- Compare to _class prototypes_ (states) instead
- Since the time constraint on the prototype axis is relaxed, the minimum must be computed over all state transitions.
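---

# Decoding States with DP

A minimal sketch of the state-based recursion, under several assumptions: each class prototype is a single feature vector, the local cost is the euclidean distance, and every state may follow every other state. The function name `decode_states` and the optional `transition_cost` matrix are hypothetical and only serve the illustration.

```python
import math


def decode_states(frames, prototypes, transition_cost=None):
    """Return (total_cost, state_path) of the cheapest state sequence.

    frames:          list of feature vectors (one per time step)
    prototypes:      list of class prototype vectors (one per state)
    transition_cost: optional matrix, transition_cost[r][s] is the
                     penalty for moving from state r to state s
    """
    n_states = len(prototypes)
    if transition_cost is None:
        transition_cost = [[0.0] * n_states for _ in range(n_states)]

    # cost[s]: cheapest accumulated cost of any path ending in state s
    cost = [math.dist(frames[0], p) for p in prototypes]
    back = []  # back[t][s]: best predecessor of state s at frame t + 1

    for x in frames[1:]:
        new_cost, pointers = [], []
        for s, p in enumerate(prototypes):
            # Minimum over ALL predecessor states, not just the neighbours.
            best_prev = min(
                range(n_states),
                key=lambda r: cost[r] + transition_cost[r][s],
            )
            new_cost.append(
                cost[best_prev] + transition_cost[best_prev][s] + math.dist(x, p)
            )
            pointers.append(best_prev)
        cost = new_cost
        back.append(pointers)

    # Trace back from the cheapest final state.
    state = min(range(n_states), key=lambda s: cost[s])
    total = cost[state]
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return total, path
```

Without transition penalties every frame simply picks its nearest prototype; a penalty on state changes smooths the decoded path.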